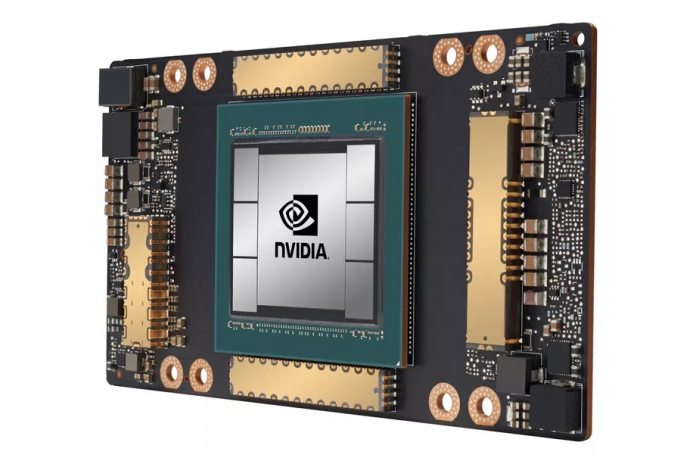

Called the A100, the new Nvidia Ampere GPU is part of the company's drive into cloud computing. With the COVID-19 pandemic ongoing, CEO Jensen Huang told reporters at GTC 2020 how important the data center business has become for Nvidia. “Those dynamics are really quite good for our data center business … My expectation is that Ampere is going to do remarkably well. It’s our best data center GPU ever made and it capitalizes on nearly a decade of our data center experience.”

In terms of scale, the A100 has over 54 billion transistors, and Huang says Nvidia reached “nearly the theoretical limits of what’s possible in semiconductor manufacturing”. According to Nvidia, the GPU is the world’s largest 7nm chip.

Stacking GPUs

Elsewhere, the new Ampere GPU has 40GB of memory, 6,912 CUDA cores, 19.5 teraflops of FP32 performance, and 1.6TB/s of memory bandwidth. In other words, it’s a beast. Nvidia’s focus is on combining A100 chips into a stacked system built for artificial intelligence workloads in the data center. The company uses its NVLink technology to combine eight A100s for a total of 320GB of GPU memory and 12.4TB/s of memory bandwidth.

“If you take a look at the way modern data centers are architected, the workloads they have to do are more diverse than ever,” explains Huang. “Our approach going forward is not to just focus on the server itself but to think about the entire data center as a computing unit. Going forward I believe the world is going to think about data centers as a computing unit and we’re going to be thinking about data center-scale computing. No longer just personal computers or servers, but we’re going to be operating on the data center scale.”

Nvidia’s new Ampere architecture is already shipping to organizations and has helped with COVID-19 research conducted at the US Argonne National Laboratory.
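For readers who want to check the eight-GPU totals quoted above, here is a rough back-of-the-envelope sketch in Python. The 40GB and 1.6TB/s per-GPU figures come from the article. The 1.555TB/s value is an assumption on our part: it is the unrounded per-GPU bandwidth we believe sits behind the 12.4TB/s total, since eight times the rounded 1.6TB/s would give 12.8TB/s instead. The names `aggregate` and the constants are purely illustrative.

```python
# Back-of-the-envelope totals for a system of eight A100 GPUs.
GPUS = 8
MEMORY_GB_PER_GPU = 40            # per-GPU memory, as quoted in the article
BANDWIDTH_TBPS_ROUNDED = 1.6      # per-GPU bandwidth, as quoted in the article
BANDWIDTH_TBPS_ASSUMED = 1.555    # assumption: unrounded per-GPU bandwidth


def aggregate(per_gpu: float, count: int = GPUS) -> float:
    """Scale a per-GPU figure to the whole system (simple multiplication)."""
    return per_gpu * count


total_memory_gb = aggregate(MEMORY_GB_PER_GPU)
total_bw_rounded = round(aggregate(BANDWIDTH_TBPS_ROUNDED), 2)
total_bw_assumed = round(aggregate(BANDWIDTH_TBPS_ASSUMED), 2)

print(f"Total GPU memory: {total_memory_gb} GB")
print(f"Total bandwidth from rounded figure: {total_bw_rounded} TB/s")
print(f"Total bandwidth from assumed figure: {total_bw_assumed} TB/s")
```

The memory total matches the 320GB quoted above exactly. The bandwidth total only lands near 12.4TB/s with the assumed unrounded figure, which suggests the 1.6TB/s number is a rounded one.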